February 19th, 2008

You’re the only one in the world using your coding standard. So it’s not really “standard”.

February 19th, 2008

Self-commenting code is a joke. It’s a good excuse for your laziness. If the code is so obvious you don’t need a comment for it, it’s probably not doing anything interesting. Code worth writing needs comments, or even a full document. Self-commenting code is just not enough.

February 19th, 2008

Refactoring 3rd party libraries is a criminal waste of time.

a) you may introduce new bugs while rewriting the code. Since you did not write the original code, they will be quite difficult to find and fix.

b) if a new version of the 3rd party library gets released, you have to either refactor it again, or live with the previous version. This possibly prevents you from getting important bug fixes or optimizations.

c) it is a complete illusion to believe you will understand the 3rd party code just because it follows your coding standard.

If you don’t know anything about the separating axis theorem, there is no way you will really “understand” an OBB-OBB overlap test. You will understand a given line, locally, but you will not understand why it is there, and how the algorithm works, globally. Finding a bug within the code is not going to be easier just because it follows your coding conventions (in particular if the bug is like this one).

February 18th, 2008

(Re-posted as a proper entry since it’s apparently impossible to format comments properly in this blog)

I was trying to answer the question from “George” in another post. So, as I was saying, there’s no big difficulty in this one:

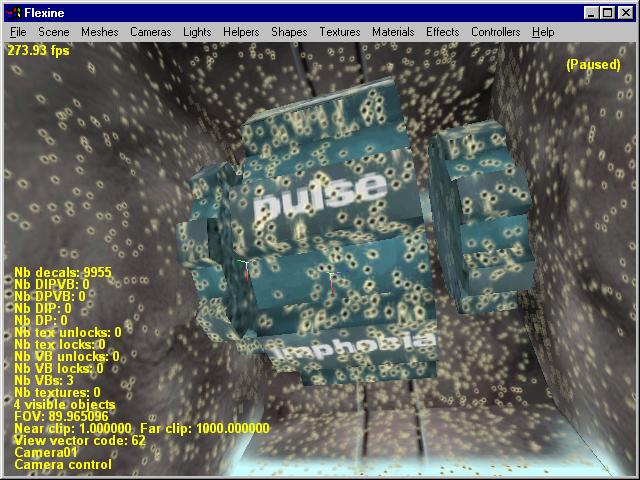

- First the decal is created. I use the clipping code from Eric Lengyel in GPG2. The output is an arbitrary number of triangles. Let’s call that the decal geometry.

- The decal geometry is passed to a decal manager, along with an owner (the mesh upon which the decal has been created). The geometry is copied to a cycling vertex buffer. The start index within the VB and the number of vertices for this decal are recorded. Those two values form an interval [a;b] within the vertex buffer, with:

a = start index

b = start index + number of decal vertices

I also bind the decal to its owner, i.e. any mesh can enumerate its decals if necessary.

- Decal culling is implicit: I just reuse mesh culling. I call the decal manager for each visible mesh, with something like this:

DecalManager->StartRender()

for each visible mesh

DecalManager->RenderMeshDecals(CurrentMesh)

DecalManager->EndRender()

- “RenderMeshDecals” enumerates all the decals of the input mesh. As said before, each decal is an interval in the cycling vertex buffer. Intervals are pushed to a dynamic buffer but not rendered yet.

- “EndRender” performs an “interval reduction” pass on the interval buffer. That is, intervals [a;b] and [b;c] are merged into a single interval [a;c]. Intervals [a;b], [b;c] and [c;d] would also be reduced to a single interval [a;d]. This is just to reduce the number of DrawPrimitive calls. For each remaining interval, there’s one DrawPrimitive call to render the decals from the VB.

- The number of decals is actually not unlimited: it’s limited by the size of the cycling VB. At the moment it’s big enough for 100,000 vertices. The buffer is cycling, meaning we wrap around at the end of the VB and start overwriting its beginning when necessary. Old (overwritten) decals automatically become obsolete when the mesh tries to enumerate them and figures out that their address (index) has been invalidated. There’s no need to remove decals from the VB when a mesh gets deleted: the mesh will simply never be rendered again, so we won’t call “RenderMeshDecals” for it, hence its decals won’t get rendered. They will still be in the VB, but only until we overwrite them with new decals (i.e. it’s all automatic, nothing to do).
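The decal manager described above can be sketched in C++ roughly as follows. This is a minimal sketch under several assumptions, not the actual implementation: the vertex layout, the `AddDecal` name, keying owners by pointer and the validity test are all hypothetical. In particular, the post doesn’t say how an overwritten decal is detected; here each decal remembers its position in an ever-growing write counter, and a decal that would straddle the end of the VB wraps to index 0 so that every decal stays one contiguous interval (stored half-open, [a, b), with b = start index + number of vertices). Intervals are sorted before the reduction pass, which is also an assumption, so touching intervals from different meshes can merge. The `draw` callback stands in for `DrawPrimitive`; with a D3D9 triangle list you would pass the vertex count divided by 3 as the primitive count.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <unordered_map>
#include <vector>

// Hypothetical vertex layout (the post doesn't specify one).
struct DecalVertex { float x, y, z, u, v; };

// One decal = a contiguous run of vertices in the cycling VB.
struct DecalRecord {
    std::uint64_t absStart;  // position in an ever-growing write counter (assumption)
    std::uint32_t count;     // number of decal vertices
};

// Half-open interval [a, b) of vertex indices within the VB.
struct Interval { std::uint32_t a, b; };

class DecalManager {
public:
    explicit DecalManager(std::uint32_t capacity) : m_vb(capacity), m_capacity(capacity) {}

    // Copies the decal geometry into the cycling VB and binds it to its owner.
    bool AddDecal(const void* owner, const std::vector<DecalVertex>& verts) {
        const std::uint32_t n = static_cast<std::uint32_t>(verts.size());
        if (n == 0 || n > m_capacity) return false;
        // Keep each decal contiguous: if it doesn't fit before the end, wrap to index 0.
        const std::uint64_t cursor = m_written % m_capacity;
        if (cursor + n > m_capacity) m_written += m_capacity - cursor;
        const std::uint64_t start = m_written;
        std::copy(verts.begin(), verts.end(),
                  m_vb.begin() + static_cast<std::ptrdiff_t>(start % m_capacity));
        m_written += n;
        m_decals[owner].push_back({start, n});
        return true;
    }

    void StartRender() { m_intervals.clear(); }

    // Pushes the still-valid decals of a visible mesh; nothing is drawn yet.
    void RenderMeshDecals(const void* mesh) {
        auto it = m_decals.find(mesh);
        if (it == m_decals.end()) return;
        std::vector<DecalRecord>& list = it->second;
        // Drop decals whose vertices may have been overwritten since they were written.
        list.erase(std::remove_if(list.begin(), list.end(),
                                  [this](const DecalRecord& d) { return !IsValid(d); }),
                   list.end());
        for (const DecalRecord& d : list) {
            const std::uint32_t a = static_cast<std::uint32_t>(d.absStart % m_capacity);
            m_intervals.push_back({a, a + d.count});
        }
    }

    // Interval reduction: merges touching intervals, then issues one draw per interval.
    void EndRender(const std::function<void(std::uint32_t first, std::uint32_t count)>& draw) {
        std::sort(m_intervals.begin(), m_intervals.end(),
                  [](const Interval& l, const Interval& r) { return l.a < r.a; });
        std::vector<Interval> merged;
        for (const Interval& iv : m_intervals) {
            if (!merged.empty() && merged.back().b == iv.a) merged.back().b = iv.b;
            else merged.push_back(iv);
        }
        for (const Interval& iv : merged) draw(iv.a, iv.b - iv.a);
    }

private:
    // Intact as long as at most `capacity` slots were consumed since the decal's start.
    // Errs on the side of dropping a decal early (e.g. after a skipped tail), never late.
    bool IsValid(const DecalRecord& d) const { return m_written <= d.absStart + m_capacity; }

    std::vector<DecalVertex> m_vb;  // stands in for the GPU vertex buffer
    std::uint32_t m_capacity;
    std::uint64_t m_written = 0;    // total slots consumed, including skipped tails
    std::unordered_map<const void*, std::vector<DecalRecord>> m_decals;
    std::vector<Interval> m_intervals;
};
```

Since each decal is made of whole triangles, merging touching intervals of a triangle list never changes what gets drawn, only how many draw calls it takes.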

And that’s about it. Here’s an old picture with ~10,000 decals randomly spawned in the scene (old scene from Explora).

February 17th, 2008

Babelfish sucks. It translated this simple sentence into exactly the opposite of what it meant.

February 16th, 2008

What is the most used feature of a level editor? I’ll tell you: object selection - a.k.a. picking. Point and click, point and click, all the time. So it would make sense to polish this part of the editor as much as possible, right? Yet I saw a number of in-house editors whose picking was surprisingly tedious to use. (Not that commercial editors are any better in that respect: 3DS MAX has probably the worst picking I’ve ever seen).

Good picking 101:

The first two are obvious, so I’m not gonna cover them.

Backface culling should be obvious as well, but it’s not. The problem is that there are two different kinds of backface culling to consider: the one based on geometry, and the one based on materials. Geometric culling happens at the triangle level, without taking the triangle’s material into account. The triangle can be CW or CCW, and the ray-vs-triangle code usually only works against one kind of triangle, or it skips the test completely and considers the triangle double-sided. Now, regardless of this, a triangle can have a material whose culling render state (typically D3DRS_CULLMODE) is completely different from the triangle’s “natural” (geometric) culling. Needless to say, it wreaks havoc on the poor picking code, and it’s easy to end up with a visible triangle on screen that you just can’t select. For example it might be a CCW triangle with a CULL_NONE render state, failing to pass the geometric test. Or the opposite: a proper CW triangle with a CCW material, meaning the user can select a triangle he doesn’t see. Yes, it’s a mess. And yes, it happens. Often. Hence the rant. Fixing it is easy: the backface culling code has to consider both the triangle’s orientation (CW/CCW) and its rendering properties. It’s painful to do if you rely on an opaque physics engine to cast a ray through the scene for you, but that’s the price to pay for a good, solid picking experience.
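Here is a minimal C++ sketch of that fix, assuming a D3D-style left-handed coordinate system in which a triangle appears clockwise to the viewer when its geometric normal (v1 - v0) x (v2 - v0) points against the ray direction. The geometric test is made double-sided (a standard Möller-Trumbore ray/triangle test without early culling), and the culling decision is then taken from the material’s cull mode instead of the triangle’s fixed winding. `CullMode`, `PickTriangle` and the helpers are hypothetical names; `CullMode` only mirrors the meaning of `D3DCULL_NONE`, `D3DCULL_CW` and `D3DCULL_CCW`.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };
static Vec3 Sub(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static Vec3 Cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
static float Dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Mirrors D3DCULL_NONE / D3DCULL_CW / D3DCULL_CCW: CW culls triangles that appear
// clockwise to the viewer, CCW culls counter-clockwise ones.
enum class CullMode { None, CW, CCW };

// Double-sided Moller-Trumbore: reports a hit regardless of winding, so the
// culling decision can be made separately, from the material.
static bool RayTriangleTwoSided(const Vec3& orig, const Vec3& dir, const Vec3& v0,
                                const Vec3& v1, const Vec3& v2, float& t) {
    const Vec3 e1 = Sub(v1, v0), e2 = Sub(v2, v0);
    const Vec3 p = Cross(dir, e2);
    const float det = Dot(e1, p);
    if (std::fabs(det) < 1e-8f) return false;  // ray parallel to the triangle
    const float inv = 1.0f / det;
    const Vec3 s = Sub(orig, v0);
    const float u = Dot(s, p) * inv;
    if (u < 0.0f || u > 1.0f) return false;
    const Vec3 q = Cross(s, e1);
    const float v = Dot(dir, q) * inv;
    if (v < 0.0f || u + v > 1.0f) return false;
    t = Dot(e2, q) * inv;
    return t >= 0.0f;
}

// True if the triangle appears clockwise along the ray, ASSUMING left-handed coordinates.
static bool AppearsClockwise(const Vec3& dir, const Vec3& v0, const Vec3& v1, const Vec3& v2) {
    return Dot(Cross(Sub(v1, v0), Sub(v2, v0)), dir) < 0.0f;
}

// A hit only counts if the triangle's material would actually render the side we see.
bool PickTriangle(const Vec3& orig, const Vec3& dir, const Vec3& v0, const Vec3& v1,
                  const Vec3& v2, CullMode materialCull, float& t) {
    if (!RayTriangleTwoSided(orig, dir, v0, v1, v2, t)) return false;
    const bool cw = AppearsClockwise(dir, v0, v1, v2);
    switch (materialCull) {
        case CullMode::None: return true;
        case CullMode::CW:   return !cw;  // clockwise triangles are culled, so not pickable
        case CullMode::CCW:  return cw;   // counter-clockwise triangles are culled
    }
    return false;
}
```

The point of the split is that the same intersection result is accepted or rejected purely by the render state, so whatever the user sees on screen is exactly what can be picked.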

But wait, there’s more. The most frustrating picking issue ever is when you clearly see an object in the viewport, you click it… and nothing happens. Or it just selects another object, seemingly one behind the camera. Or is it really behind the camera? What usually happens is that the camera’s near clip plane is not zero (zero is bad), and as a result any geometry located between the camera’s position and the near clip plane is not rendered. However, the raycast used to pick objects typically starts directly from the camera’s position. In other words, the ray can hit objects that are not even rendered, hence invisible to users. In a level editor where the camera is free to go everywhere, through walls and everything, this actually happens a lot! And it creates a lot of pain for the poor users. Fixing it is easy: if your near clip plane is set to N, start the raycast N units away from the camera’s position. That’s all!
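A minimal C++ sketch of that fix, assuming unit-length ray and view directions; `PickRayStart` is a hypothetical name. The near plane sits N units away along the view axis, so for a ray through the center of the screen the start point is exactly N units out, as described above. For off-center rays this sketch divides N by the cosine of the angle between the ray and the view axis, which puts the start point exactly on the near plane rather than slightly in front of it.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// Returns where a pick ray should start so that it skips geometry lying between
// the camera and the near clip plane (geometry that is never rendered).
// ASSUMES rayDir and camForward are unit length.
Vec3 PickRayStart(const Vec3& camPos, const Vec3& rayDir, const Vec3& camForward,
                  float nearClip) {
    const float cosAngle =
        rayDir.x * camForward.x + rayDir.y * camForward.y + rayDir.z * camForward.z;
    if (cosAngle <= 0.0f) return camPos;  // degenerate: ray doesn't go into the frustum
    // Along an off-center ray the near plane is reached after nearClip / cos(angle) units.
    const float tStart = nearClip / cosAngle;
    return {camPos.x + rayDir.x * tStart, camPos.y + rayDir.y * tStart,
            camPos.z + rayDir.z * tStart};
}
```

The picking raycast then starts from the returned point instead of the camera position, using the same direction as before.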

February 16th, 2008

I recently posted an article from last year, about the “sweep and prune” algorithm. I previously posted it on the Bullet forums but couldn’t upload it on CoderCorner for technical reasons.

Today, I finally found some time to put the source code together, extract it from ICE, and upload it as well. So, here it is. It’s an efficient implementation of an array-based sweep-and-prune. It should be fast. In my tests I found it faster than my previous version from OPCODE 1.3, and also slightly faster than the PhysX implementation. For technical details about this new version, please refer to the document above.

The library doesn’t support “multi-SAP” yet; I will need to find more time to sit down and re-implement that in a releasable form. Oh well, later.

February 16th, 2008

Re-inventing square wheels is a waste of time. On the other hand, using standard libraries makes your product look and feel like all the others. I don’t want to have a standard product. I want to crush the competition with bright polished shiny wheels that they don’t have.

February 15th, 2008

So apparently they do want to port it to CUDA. I admit I’m curious and slightly skeptical. I don’t know if they are porting the software part of the SDK to CUDA, or the hardware part. I see problems for both of them. In particular, the convex-vs-mesh algorithm in software was quite painful to write, and I would be amazed if a direct conversion actually works on a GPU.

But if it does, wow.